- The button is currently only visible within an unmanaged solution or the default solution. This seems to be because managed solutions are not directly editable, though I think this is a bit of an oversight, because...

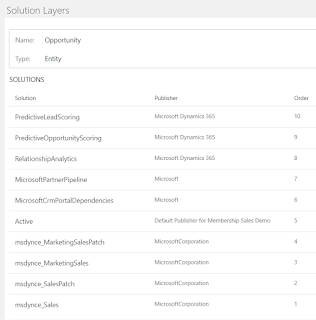

- When you click the button for a solution component, it will display any managed solutions that contain that component, along with an 'Active' solution. For now, I'm treating the 'Active' solution as the same as the default solution, but there may be subtle differences

- It makes sense that this only displays managed solutions, as these are the only solutions that are individually layered. In contrast, unmanaged solutions are all combined into the one unmanaged layer

Sunday, 24 March 2019

Solution Layers

Saturday, 31 March 2018

Common Data Services Architecture in CDS 2.0

Microsoft have made several recent announcements in March 2018, but for me the most significant is the PowerApps Spring Update. This may seem strange for me, a CRM MVP, to say, given how much there was on CRM in the Business Applications Spring ’18 Release Notes, but I think it makes sense once you realise that the PowerApps Update describes the new and future Common Data Services (CDS) architecture, and that in this architecture, much of CDS is the CRM platform (aka xRM).

Rather than CDS being a separate layer or component that then communicates with the CRM platform, CDS and CRM are a shared platform.

Strictly, it's not quite as simple as the last sentence makes out, especially as CDS now splits into Common Data Service for Applications and Common Data Service for Analytics (I'm hoping we'll soon get good acronyms to distinguish these), but for now it's worth emphasising that, if using Common Data Service for Applications, you are directly using the same platform components that CRM uses. This has several major implications (all of which are good to my mind):

- CDS for Apps can fully use the CRM platform features, such as workflow, business process flows, calculated fields. This immediately makes CDS a hugely powerful platform, but also means there are no decisions to take on which platform to use, or differences to take into account, because they are the same platform

- There are no extra integration steps. Commissioning a CDS environment will give you a CRM organisation, and equally, commissioning a CRM organisation will give you a CDS environment. This is not a duplication of data or platforms, because again, they are the same platform

Thursday, 29 March 2018

Concurrent or Consistent - or both

Am I an optimist

So, what is it about? Concurrency control is used to ensure data remains consistent when multiple users are making concurrent modifications to the same data. The two main models are pessimistic concurrency and optimistic concurrency. The difference between the two can be illustrated by considering two users (Albert and Brenda), who are trying to update the same field (X) on the same record (Y). In each case the update is actually two steps (reading the existing record, then updating it), and Albert and Brenda try to do the steps in the following time sequence:

- Albert reads X from record Y (let's say the value is 30)

- Brenda reads record Y (while it's still 30)

- Albert updates record Y (Albert wants to add 20, so he updates X to 50)

- Brenda updates record Y (she wants to subtract 10, so subtracts 10 from the value (30) she read in step 2, so she updates X to 20)

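With pessimistic concurrency, the system locks the record when it is read, so the sequence becomes:

- Albert reads X from record Y (when the value is 30), and the system locks record Y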

- Brenda tries to read record Y, but Albert's lock blocks her read, so she sits waiting for a response

- Albert adds 20 to his value (30), and updates X to 50, then the system releases the lock on Y

- Brenda now gets her response, which is that X is now 50

- Brenda subtracts 10 from her value (50), and updates X to 40
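The first two sequences above can be replayed in a few lines. This is only a toy Python sketch, not CRM code: the `Record` class and function names are made up for illustration, and a `threading.Lock` stands in for the record lock.

```python
import threading


def unprotected_sequence():
    """Replays the first sequence: both users read 30, then both write."""
    x = 30
    albert_read = x           # Albert reads 30
    brenda_read = x           # Brenda reads 30
    x = albert_read + 20      # Albert writes 50
    x = brenda_read - 10      # Brenda writes 20, overwriting Albert's change
    return x


class Record:
    """Toy stand-in for record Y (hypothetical, not a CRM type)."""

    def __init__(self, x):
        self.x = x
        self.lock = threading.Lock()  # plays the role of the record lock


def locked_adjust(record, delta):
    # Read and write happen under one lock, so the other user
    # cannot read a stale value in between.
    with record.lock:
        value = record.x
        record.x = value + delta


def pessimistic_sequence():
    y = Record(30)
    albert = threading.Thread(target=locked_adjust, args=(y, 20))
    brenda = threading.Thread(target=locked_adjust, args=(y, -10))
    albert.start()
    brenda.start()
    albert.join()
    brenda.join()
    return y.x
```

Without any control, Albert's change is lost and X ends at 20. With the lock, both changes are applied and X ends at 40, whichever user gets the lock first.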

With optimistic concurrency, nothing is locked; instead, each update is checked against a record version number:

- Albert reads X (30) from record Y, and also reads a system-generated record version number (let's say it's version 1)

- Brenda reads record Y (while it's still 30), and the system-generated record version number (which is still version 1, as the record's not changed yet)

- Albert adds 20 to his value (30), and updates X to 50. The update is only permitted because Albert's version number (1) matches the current version number (1). The system updates the version number to 2

- Brenda subtracts 10 from her value (30), and tries to update X to 20. This update is not permitted as Brenda's version number (1) does not match the current version number (2). So, Brenda will get an error

- Brenda now tries again, reading the current value (50) and version number (2), then subtracting 10, and the update is allowed

- In the Entity instance that you are sending to the Update, ensure the RowVersion property is set to the RowVersion that you received when you read this record

- In the UpdateRequest, set the ConcurrencyBehavior to IfRowVersionMatches

- Handle any exceptions. If there is a row version conflict (as per my optimistic scenario above), then you get a ConcurrencyVersionMismatch exception.
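To make the version check concrete, here is a minimal in-memory sketch in Python. It is not the CRM SDK: `VersionedRecord`, `VersionMismatch` and `adjust_with_retry` are hypothetical names that only mimic the idea behind RowVersion and the ConcurrencyVersionMismatch error.

```python
class VersionMismatch(Exception):
    """Raised when the caller's version is stale (hypothetical name)."""


class VersionedRecord:
    """Toy record with a value X and a system-maintained version number."""

    def __init__(self, x):
        self.x = x
        self.version = 1

    def read(self):
        return self.x, self.version

    def update(self, new_x, expected_version):
        # The update is only allowed if the caller read the current version.
        if expected_version != self.version:
            raise VersionMismatch(
                f"expected version {expected_version}, current is {self.version}"
            )
        self.x = new_x
        self.version += 1


def adjust_with_retry(record, delta, max_attempts=3):
    """Re-read and retry when another update got in first."""
    for _ in range(max_attempts):
        value, version = record.read()
        try:
            record.update(value + delta, version)
            return
        except VersionMismatch:
            continue
    raise VersionMismatch("gave up after repeated conflicts")


def replay_scenario():
    y = VersionedRecord(30)
    albert_value, albert_version = y.read()
    brenda_value, brenda_version = y.read()
    y.update(albert_value + 20, albert_version)       # allowed, version becomes 2
    try:
        y.update(brenda_value - 10, brenda_version)   # stale version 1
        conflict = False
    except VersionMismatch:
        conflict = True
    adjust_with_retry(y, -10)                         # re-reads 50 and version 2
    return conflict, y.x, y.version
```

Replaying the Albert and Brenda sequence this way gives a conflict on Brenda's first attempt, and a final value of 40 once she retries. Whether to retry automatically, or to show the user the conflict, is a design decision for the calling code.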

Notes

- During CRM implementations, if asked 'Can we do X in CRM?', I very rarely just say no, and I'm more likely to say no for reasons other than purely technical ones. However, when I've been asked to prevent concurrent access to records, then this is a rare case when I go for the short answer of 'no'

- We can get a little bit of control over locking within a synchronous plugin, as this runs within the CRM transaction. This is the basis of the most robust CRM-based autonumber implementations. However, the lock can't be held outside of the platform operation

- My examples have concentrated on updating a single field, but any talk of locking or row version is at a record level. If Albert and Brenda were changing different fields, then we may not have a consistency issue to address. However, for practical reasons, any system applies locks and row versioning at a record level, not a field level. Also, even if the updates are to different fields, it is possible that the change they make is dependent on other fields that may have changed, so for optimistic concurrency we do get a ConcurrencyVersionMismatch if any fields had changed

Friday, 6 December 2013

Crm 2013 – No more ExtensionBase tables

'new_entity0'.new_entityId as 'new_entityid'

, 'new_entity0'.OwningBusinessUnit as 'owningbusinessunit'

, 'new_entity0'.OwnerId as 'ownerid'

, 'new_entity0'.OwnerIdType as 'owneridtype'

from new_entity as 'new_entity0'

where ('new_entity0'.new_entityId = @new_entityId0)

where ([new_entityId] = @new_entityId1)

These were deadlocked, with the SELECT statement being the deadlock victim. The locks that caused the deadlock were:

- The SELECT statement had a shared lock on the new_entityExtensionBase table, and was requesting a shared lock on new_entityBase table

- The UPDATE statement had an update lock on the new_entityBase table, and was requesting an update lock on new_entityExtensionBase table

- Although the SELECT statement was requesting fields from the new_entityBase table, it had obtained a lock on the new_entityExtensionBase table to perform the join in the new_entity view

- The UPDATE statement that updates a custom attribute (new_attribute) on the new_entity entity would have been the second statement of 2 in the transaction. The first statement would modify system fields (e.g. modifiedon) in the new_entityBase table, and hence place an exclusive lock on a row in the new_entityBase table, and the second statement is the one above, which is attempting to update the new_entityExtensionBase table

- With just the one entity table, the SELECT statement only needs one lock, and does not need to obtain one lock, then request another

- Only one UPDATE statement is required in the transaction, so locks are only required on the one table and they can be requested together, as they would be part of just one statement

- Both operations will complete more quickly, reducing the time for which the locks are held
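The deadlock described earlier is a cycle in the 'who is waiting for whom' graph, and the single table removes the cycle. The following Python sketch illustrates that reasoning only; it is not how SQL Server detects deadlocks, and it makes the simplifying assumption that each statement holds at most one lock and waits for at most one.

```python
def find_deadlock(held, wanted):
    """Return the statements forming a wait cycle, or None.

    held:   statement -> resource it currently has locked
    wanted: statement -> resource it is waiting to lock
    """
    owner = {resource: stmt for stmt, resource in held.items()}
    # waits_for[s] is the statement holding the resource that s wants
    waits_for = {
        stmt: owner[resource]
        for stmt, resource in wanted.items()
        if resource in owner and owner[resource] != stmt
    }
    for start in waits_for:
        seen = []
        current = start
        while current in waits_for and current not in seen:
            seen.append(current)
            current = waits_for[current]
        if current == start:
            return seen
    return None


# Two tables: each statement holds one table and wants the other.
two_tables = find_deadlock(
    held={"SELECT": "new_entityExtensionBase", "UPDATE": "new_entityBase"},
    wanted={"SELECT": "new_entityBase", "UPDATE": "new_entityExtensionBase"},
)

# One table: the SELECT may have to wait, but nothing waits on the SELECT.
one_table = find_deadlock(
    held={"UPDATE": "new_entityBase"},
    wanted={"SELECT": "new_entityBase"},
)
```

With two tables the function reports a cycle between the SELECT and the UPDATE; with one table the SELECT simply waits and no cycle can form.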

Wednesday, 17 November 2010

How to use impersonation in an ASP .Net page using IFD in CRM 4.0

The fundamental requirement is to create a custom ASP .Net page that is accessible both internally (via AD authentication) and over the Internet (via IFD authentication), and where the code will access CRM data under the context of the user accessing the page. To do this, you need to deploy and configure your code as follows:

- Deploy the ASP .Net page within the CRM Web Site (the only supported place is within the ISV directory). If you don't do this, then IFD authentication will not apply to your page

- Run the ASP .Net page within the CrmAppPool, and do not create an IIS application for it. If you don't do this, then you won't be able to identify the authenticated user

- Ensure that the CRM HttpModules MapOrg and CrmAuthentication are enabled. This will happen by default by inheritance of the settings from the root web.config file in the CRM web site, but I'm mentioning it here as there are some circumstances (when you don't need IFD) in which it is appropriate to disable these HttpModules. Again, if the HttpModules aren't enabled, then you won't be able to identify the authenticated user

- As your code is in a virtual directory (rather than a separate IIS application), ASP .Net will look for your assemblies in the [webroot]\bin folder, so that is where you should put them (or in the GAC). The initial release documentation for CRM 4.0 stated that it was unsupported to put files in [webroot]\bin folder of the CRM web site, but this restriction has been lifted

You also need to follow certain coding patterns within your code. An example of these can be found here. Note that 'Crm web services' refers to both the CrmService and the MetadataService:

- Ensure you can identify the organisation name. The example code shows how to parse this from the Request.Url property, though I prefer to pass this on the querystring (which the example also supports)

- Use the CrmImpersonator class. All access to the Crm web services needs to be wrapped within the using (new CrmImpersonator()) block. If you don't do this you will probably get 401 errors, often when accessing the page internally via AD authentication (see later for a brief explanation)

- Use the ExtractCrmAuthenticationToken static method. This is necessary to get the context of the calling user (which is stored in the CallerId property)

- Use CredentialCache.DefaultCredentials to pass AD credentials to the Crm web services. If you don't do this, then you will probably get 401 errors as you'd be trying to access the web service anonymously (IIS would throw these 401 errors)

That should be all that you need on the server side. The final piece of the puzzle is to ensure that you provide the correct Url when accessing the page, which again needs a little consideration:

When accessing the page from an internal address, the Url should be of the form:

http://[server]/[orgname]/ISV/MyFolder/MyPage.aspx

When accessing the page from an external address, the Url should be of the form:

http://[orgname].[serverFQDN]/ISV/MyFolder/MyPage.aspx

This is relatively easy to achieve when opening the page from within CRM (i.e. in an IFrame, via an ISV.config button or in client script). In each case you can use the PrependOrgName global function in client script - e.g.

var u = PrependOrgName('/ISV/MyFolder/MyPage.aspx');

This function will determine correctly whether to add the organisation name to the Url. Note also that I've provided a relative Url, which will ensure the first part of the Url is always correct. As this uses a JavaScript function, you will always need to use a small piece of code to access the page, and cannot rely on statically providing the Url in the source of an IFrame, or in the Url attribute of an ISV.Config button. Any relative Urls in SiteMap should automatically get the organisation name applied correctly. Remember to also pass the organisation name on the querystring if the server code expects this (you can get the organisation name from the ORG_UNIQUE_NAME global variable)
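If you ever need to build these Urls outside of CRM client script (for example in a test harness), the two forms can be sketched as below. This is Python for illustration only: the function names are made up, and the `orgname` query string parameter is an assumption, so use whatever name your server code actually reads.

```python
from urllib.parse import urlencode


def internal_url(server, org_name, relative_path):
    """Internal (AD) form: the organisation name is the first path segment."""
    return f"http://{server}/{org_name}{relative_path}"


def external_url(server_fqdn, org_name, relative_path):
    """External (IFD) form: the organisation name is a host name prefix."""
    return f"http://{org_name}.{server_fqdn}{relative_path}"


def with_org_on_querystring(url, org_name, param_name="orgname"):
    """Append the organisation name; param_name is a hypothetical default."""
    separator = "&" if "?" in url else "?"
    return url + separator + urlencode({param_name: org_name})


page = "/ISV/MyFolder/MyPage.aspx"
internal = internal_url("crm", "Excitation", page)
external = external_url("crm.example.com", "Excitation", page)
```

Within CRM itself, PrependOrgName remains the simpler option, as it already knows which form applies.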

Earlier I promised an explanation of what the server code does. This is not as complete an explanation as it could be, but the basics are:

- The HttpModules identify the correct CRM organisation (MapOrg) from the Url provided, and place information about the authenticated calling user in the HttpContext (CrmAuthentication)

- The ExtractCrmAuthenticationToken method reads the user context from the HttpContext, and puts the user's systemuserid in the CallerId property of the CrmAuthenticationToken

- Because the CallerId is set, the call to CRM is necessarily using CRM impersonation. For this to be permitted, the execution account (see Dave Berry's blog for a definition) must be a member of the AD group PrivUserGroup. The execution account is the AD account that is returned by CredentialCache.DefaultCredentials. This is where things get a little interesting

- If the request comes via the external network and IFD authentication is used, CRM handles the authentication outside of IIS and no IIS / ASP .Net impersonation occurs. Therefore CredentialCache.DefaultCredentials will return the AD identity of the process, which is the identity of the CrmAppPool, which necessarily is a member of PrivUserGroup

- However, if the request comes via the internal network, AD authentication is used and IIS / ASP .Net impersonation does occur (through the <identity impersonate="true" /> setting in web.config). This impersonation will change the execution context of the thread, and CredentialCache.DefaultCredentials would then return the AD context of the caller. This is fine in a pure AD authentication scenario, but the use of the ExtractCrmAuthenticationToken method means that CRM impersonation is necessarily expected; this will only work if the execution account is a member of PrivUserGroup, and CRM users should not be members of PrivUserGroup. This is where the CrmImpersonator class comes in: its constructor reverts the thread's execution context to that of the process (i.e. it undoes the IIS / ASP .Net impersonation), so that CredentialCache.DefaultCredentials will now return the identity of the CrmAppPool, and the CRM platform will permit CRM impersonation
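The explanation above boils down to a small decision table, which may be easier to follow written out. The Python below is only a model of that reasoning, with made-up names; it does not call or reproduce any CRM or .Net API.

```python
APP_POOL = "CrmAppPool identity"
CALLER = "calling user's AD identity"


def execution_identity(request_origin, inside_crm_impersonator):
    """Model of which identity CredentialCache.DefaultCredentials would give."""
    if request_origin == "external":
        # IFD: CRM authenticates outside IIS, so no IIS / ASP .Net impersonation
        return APP_POOL
    if request_origin == "internal":
        # AD: IIS / ASP .Net impersonates the caller unless CrmImpersonator
        # has reverted the thread to the process identity
        return APP_POOL if inside_crm_impersonator else CALLER
    raise ValueError(f"unknown request origin: {request_origin}")


def crm_impersonation_permitted(identity):
    # Assumes only the app pool identity is a member of PrivUserGroup
    return identity == APP_POOL
```

The one failing combination is an internal request made outside the CrmImpersonator block, which matches the 401 errors mentioned earlier.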

To finish off, here are a few other points to note:

- IFD impersonation only applies when accessing the CRM platform. If you use IFD authentication, there is no way of impersonating the caller when accessing non-CRM resources (e.g. SQL databases, SharePoint, the file system); it cannot be done, so save yourself the effort and don't even try (though, for completeness, SQL impersonation is possible using EXECUTE AS, but that's it)

- If you want to use impersonation, do not use the CrmDiscoveryService. The CrmDiscoveryService can only be used with IFD if you know the user's username and password, and you won't know these unless you prompt the user, which kind of defeats the point of impersonation

Thursday, 11 November 2010

When is the Default Organisation not the Default Organisation

However, this does not always do what some people expect. CRM has 2 distinct concepts of a 'Default Organisation', and only one of them can be set using Deployment Manager. The 'Default Organisation' that you set in Deployment Manager is a system-wide setting whose primary use is to define which organisation database is used by code that accesses CRM 4.0 through the CRM 3.0 web services (CRM 3.0 did not support multiple organisations, so the web services had no way to specify the organisation to connect to).

The other type of 'Default Organisation' applies to users. Each user has a Default Organisation, which is the organisation that they are connected to if browsing to a Url that does not contain the organisation name. For example, if a user browses to http://crm/Excitation/loader.aspx, then the user will necessarily be taken to the Excitation organisation, but if they browse to http://crm/loader.aspx, they will be taken to their default organisation, which has no relationship to the 'Default Organisation' that is set in Deployment Manager. Each user's default organisation will be the first organisation in which their CRM user record was created.
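The rule in the previous paragraph can be modelled in a few lines. This Python sketch is an illustration only: the function name and the idea of passing in a set of known organisation names are assumptions, and CRM's real Url handling is more involved.

```python
from urllib.parse import urlparse


def resolve_organisation(url, known_orgs, user_default_org):
    """Pick the organisation for a request, per the rule described above."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    if segments and segments[0] in known_orgs:
        return segments[0]      # organisation named in the Url wins
    return user_default_org     # otherwise the user's own default applies


orgs = {"Excitation", "Sandbox"}  # 'Sandbox' is a made-up second organisation
named = resolve_organisation("http://crm/Excitation/loader.aspx", orgs, "Sandbox")
unnamed = resolve_organisation("http://crm/loader.aspx", orgs, "Sandbox")
```

Note that the system-wide 'Default Organisation' from Deployment Manager does not appear anywhere in this function, which is the point of the distinction.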

One issue that can arise is if a user connects using a Url that does not contain the organisation name, and either their default organisation has been disabled, or their user account in their default organisation has been disabled. In this scenario, the user would receive either the error 'The specified organization is disabled' or 'The specified user is either disabled or is not a member of any business unit'. The simplest solution would be to specify an appropriate organisation name in the Url; however if this is not possible, the rest of this post describes an unsupported alternative.

Unfortunately, none of the MSCRM tools will display a user's default organisation, nor is there a supported way to change this (though there is an unsupported way - see below). All the information is stored in the MSCRM_Config database, in the SystemUser, Organization and SystemUserOrganizations tables. The following output shows some of the relevant data from these tables:

SystemUser table

Organization table

SystemUserOrganizations table

The DefaultOrganizationId field in SystemUser defines each user's default organisation, and this can be joined to the Id field in the Organization table.

The fields that define the user are a little more complex: The Id field in SystemUser is unique to each CRM user. You can join the SystemUser table to the SystemUserOrganizations table using the SystemUser.Id and the SystemUserOrganizations.UserId fields. The CrmUserId field in SystemUserOrganizations can be joined to the systemuserid field in the systemuserbase table in each CRM organisation database. Note that the same user will have a different systemuserid in each organisation database. The following query illustrates these joins, taking the user's name from one of the organisation databases (it's not stored in MSCRM_Config):

select o.UniqueName, u.FullName

from Organization o

join SystemUser su on o.Id = su.DefaultOrganizationId

join SystemUserOrganizations suo on su.Id = suo.UserId

join Excitation_MSCRM..systemuser u on suo.CrmUserId = u.systemuserid

So, that's how it fits together. As this is all SQL data, it is not difficult to modify this, but be aware that to do so is completely unsupported, and could break your CRM implementation. If you were to make any changes, make sure you back up the relevant databases (especially MSCRM_Config) before you do so.

If you did want to change a user's default organisation, please heed the warning in the preceding paragraph and back up the MSCRM_Config database. The following SQL update statement will change the default organisation of a given user, based on their systemuserid in one organisation database. The reason for writing the query this way is to ensure that a user's default organisation can only be set to an organisation that they exist in, and this query should only ever modify one record. If it modifies 0 records, then check the @systemuserid value, and if it modifies more than one record then your MSCRM_Config database is probably corrupt, and you should reinstall CRM and reimport your organisation databases (I was serious about my warnings).

declare @systemuserid uniqueidentifier

set @systemuserid = '25E1DC1D-BEC2-449B-AAD8-4A6309122AE1' -- replace this

update SystemUser

set DefaultOrganizationId = suo.OrganizationId

from SystemUserOrganizations suo

where suo.UserId = SystemUser.Id

and suo.CrmUserId = @systemuserid
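One way to enforce the 'exactly one record' expectation is to run the update in a transaction and roll back unless the row count is 1. The sketch below shows the idea in Python against an in-memory SQLite database that merely imitates the two tables; it is not SQL Server, the sample ids are invented, and the update is rewritten with correlated subqueries rather than the T-SQL UPDATE ... FROM form above.

```python
import sqlite3


def build_demo_config_db():
    """Tiny imitation of the relevant MSCRM_Config tables (invented data)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE SystemUser (Id TEXT PRIMARY KEY, DefaultOrganizationId TEXT);
        CREATE TABLE SystemUserOrganizations (
            UserId TEXT, OrganizationId TEXT, CrmUserId TEXT);
        INSERT INTO SystemUser VALUES ('user-1', 'org-A');
        INSERT INTO SystemUserOrganizations VALUES ('user-1', 'org-A', 'crm-user-in-A');
        INSERT INTO SystemUserOrganizations VALUES ('user-1', 'org-B', 'crm-user-in-B');
        """
    )
    return conn


def set_default_org(conn, crm_user_id):
    """Apply the change only if exactly one SystemUser row is affected."""
    cur = conn.execute(
        """
        UPDATE SystemUser
        SET DefaultOrganizationId = (
            SELECT suo.OrganizationId
            FROM SystemUserOrganizations suo
            WHERE suo.UserId = SystemUser.Id AND suo.CrmUserId = :id)
        WHERE EXISTS (
            SELECT 1
            FROM SystemUserOrganizations suo
            WHERE suo.UserId = SystemUser.Id AND suo.CrmUserId = :id)
        """,
        {"id": crm_user_id},
    )
    if cur.rowcount != 1:
        conn.rollback()  # leave the data untouched
        raise RuntimeError(f"expected 1 row, update touched {cur.rowcount}")
    conn.commit()
```

The same pattern (begin a transaction, check the affected row count, then commit or roll back) can be applied in T-SQL, though the unsupported status of the change is exactly the same.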

One final point: CRM caches some of the data in MSCRM_Config, so you'd need to recycle the CRM application pool to be sure any changes have taken effect.

Tuesday, 9 November 2010

The CRM 4.0 Reporting Services Connector - how it works

How the Connector is installed and invoked

The connector is installed as a Data Processing Extension with SSRS. These extensions are registered within the rsreportserver.config file on the Reporting Server, as per the following snippet:

<Data>
  <Extension Name="SQL" Type="Microsoft.ReportingServices.DataExtensions.SqlConnectionWrapper,Microsoft.ReportingServices.DataExtensions" />
  <Extension Name="OLEDB" Type="Microsoft.ReportingServices.DataExtensions.OleDbConnectionWrapper,Microsoft.ReportingServices.DataExtensions" />
  <Extension Name="MSCRM" Type="Microsoft.Crm.Reporting.DataConnector.SrsExtConnection,Microsoft.Crm.Reporting.DataConnector" />
</Data>

All CRM reports with SSRS are configured to use a specific SSRS Data Source. When the Connector is installed, the Data Source is changed to use the MSCRM Data Processing Extension, instead of the default SQL Server extension. See images below:

Report properties showing the MSCRM Data Source

MSCRM Data Source using the SQL Server extension before the connector is installed

MSCRM Data Source using the CRM extension after the connector is installed.

There are 3 differences between these configurations:

- The Connection Type, which specifies the extension

- The Connection String is absent with the CRM connector. This is because the connector reads some of the database information from registry values that were created during its installation, and some from data passed to it when the report is run (see below)

- The Credentials. With the SQL Server connector, standard Windows Integrated security is used - i.e. the user's AD credentials are used to connect to SQL Server. With the CRM connector, separate 'credentials' are passed to SSRS (again, see below)

What happens when a report is run

If you try to run a CRM report with the CRM connector installed, the connector will require some 'credentials', as per point no.3 above. This image shows what happens if you try to run a report from Report Manager:

Running a CRM report from Report Manager when the CRM connector is installed

As the report uses a data source that uses the CRM connector, the RS Report Server code calls the CRM connector code (the SrsExtConnection class in the Microsoft.Crm.Reporting.DataConnector assembly, as per the rsreportserver.config data above). The code will then:

- Check that it is permitted to impersonate a CRM user. This checks that the identity the code is running under (which is the identity of the ReportServer application pool, or the Reporting Services service, depending on the version of Reporting Services) belongs to the AD group PrivReportingGroup

- Connect to the MSCRM_Config database to determine the correct MSCRM organization database, based on the organizationid that was passed in the 'credentials'

- Connect to the relevant MSCRM organization database. Note that this is done (as was the previous step) using integrated security under the AD identity as per step 1 above

- Use the SQL statement SET Context_Info to pass the calling CRM user's systemuserid into the Context_Info

- Execute the SQL statement(s) within the report definition. The definitions of all filtered views use the fn_FindUserGuid function to read the systemuserid from the Context_Info

What can you do with this information

One use is for troubleshooting. Checking the rsreportserver.config is a quick way to see if the connector is installed, and checking the configuration of the MSCRM Data Source will tell you if the connector is in use. Changing the MSCRM Data Source is a quick way to turn the connector on or off for test purposes.

You can also run the reports directly, rather than from CRM. Again, when troubleshooting I find it useful to run a report directly from the Report Manager web interface. To do this with the connector, you need to enter the systemuserid and organizationid when prompted (see image above). These values can be read from the filteredsystemuser and filteredorganization views respectively in the MSCRM database.

A further option is to run the reports via other means, such as Url Access, as described here (that article was written for CRM 3, see here for an update for CRM 4). To do this with the connector installed, you will also have to pass the systemuserid and organizationid on the query string. This is done using the following syntax:

&dsu:CRM=<systemuserid>&dsp:CRM=<organizationid>
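If you are generating these Urls in code, the two parameters can be built as in the sketch below. It is Python for illustration, the function name is made up, and the GUIDs are sample values; the rest of the report Url is left to you.

```python
from urllib.parse import urlencode


def connector_credentials_suffix(systemuserid, organizationid):
    """Build the &dsu:CRM=...&dsp:CRM=... part of a Url Access query string."""
    params = {"dsu:CRM": systemuserid, "dsp:CRM": organizationid}
    # safe=":" keeps the colon in the parameter names unescaped
    return "&" + urlencode(params, safe=":")


suffix = connector_credentials_suffix(
    "25E1DC1D-BEC2-449B-AAD8-4A6309122AE1",   # sample systemuserid
    "11111111-2222-3333-4444-555555555555",   # sample organizationid
)
```

The GUIDs here are passed without braces; if yours include braces, urlencode will percent-encode them.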